We’ve long championed subtitles, but dubbing has only grown more popular as the entertainment industry becomes increasingly global. And what about those movies where Americans are supposed to be Italian or French, but they’re speaking English with an accent that might be generously described as “continental”?

Israeli AI dubbing company Deepdub says it has an app for that. The company already generates dubs for local-language content in over 130 languages, and its new tool, Accent Control, uses AI to modify or retain a character’s accent when content is dubbed into another language.

“Most of the content — not just entertainment, but education — is not accessible to most of the people around the world, especially if you go down the line to the kids,” said Deepdub CEO and cofounder Ofir Krakowski. “They cannot read themselves, so they actually cannot access knowledge. Our aim is actually to democratize the content.”

Voice actors covered by SAG-AFTRA’s TV and film collective bargaining agreement (and by the animation agreement currently out for ratification) have protections that require a studio to notify the guild if it is using AI. The studio is also responsible for informing the actor and obtaining their consent. While Deepdub isn’t a SAG-AFTRA signatory, many would-be clients are.

In a Deepdub demo video, the app instantly modulates the natural voice of an Israeli actress so that she sounds as if she speaks English with a British, French, Australian, American, or Italian accent. It also accounts for regional variation: Accent Control can distinguish Parisian French from Belgian or Canadian French, and it can strengthen or soften an accent.

Krakowski points to Ridley Scott’s “Napoleon,” in which the cast speaks English with a variety of inflections. With Accent Control, he said, star Joaquin Phoenix could have had a French accent while (technically) keeping his own performance.

Accent Control also works the other way: Imagine retaining an iconic accent for a character like Hannibal Lecter, Shrek, or Jack Sparrow while translating the film into Polish. It’s also useful for corporate clients who want cloned versions of their CEOs’ voices speaking other languages in company videos.

What’s more likely than making Phoenix sound French is more local-language content with dubs that signal a character’s country of origin. Krakowski said AI dubbing costs 50 to 70 percent less than traditional voiceover dubbing and is five times as fast, because rather than having actors record every line of dialogue, AI lets multiple teams work concurrently.

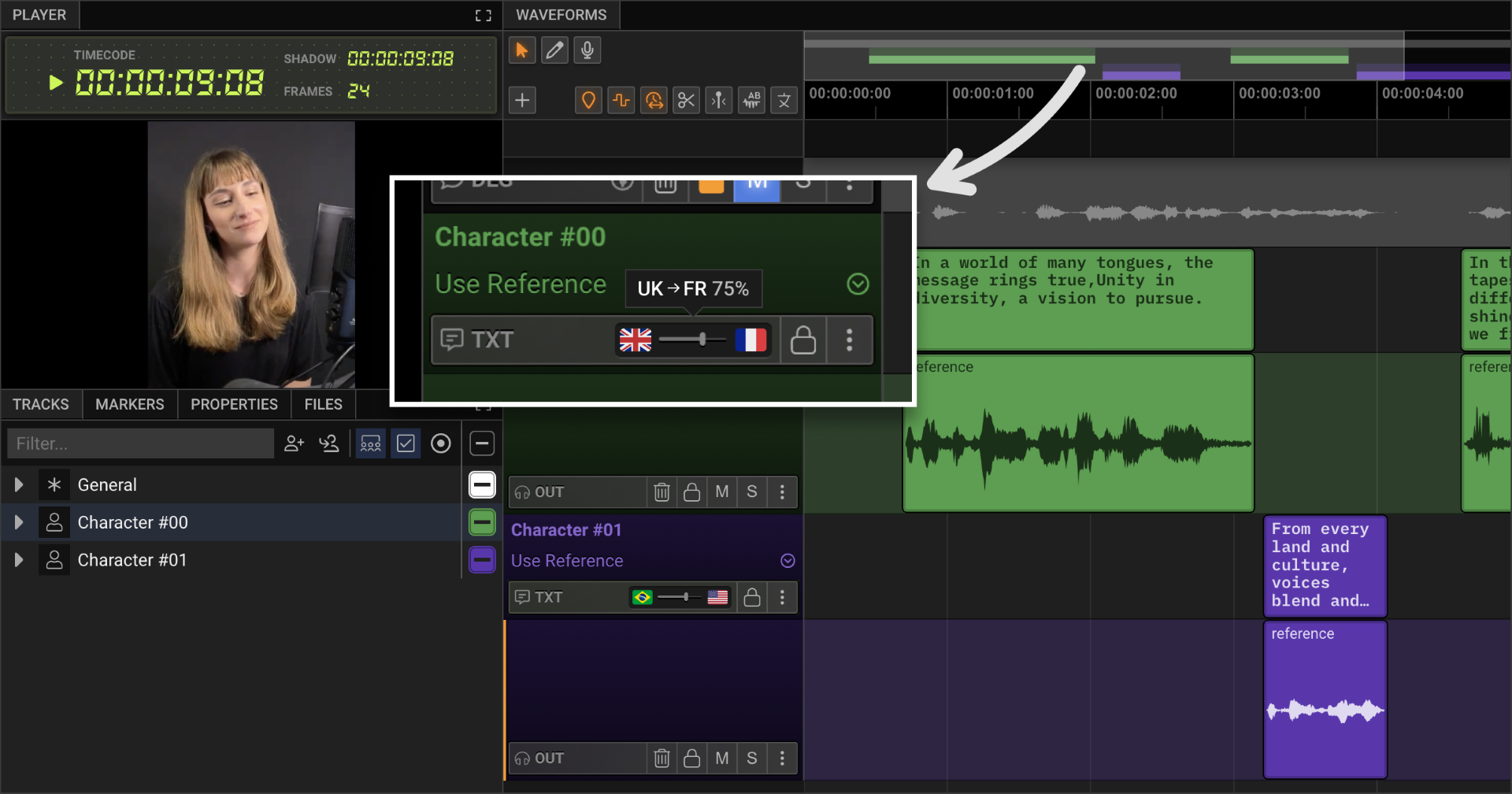

Deepdub’s accent models are trained on proprietary datasets, and commercial rights are built into its licensed voices. The company also has a talent platform that lets actors make their voices available to content creators and to other dubbing studios that pay to use Deepdub’s editing software.

All of this is more nightmare fuel for voice and dubbing actors who fear AI will take their jobs. Krakowski said he believes AI dubbing retains or creates more jobs than it eliminates, with actors getting more work because their performances can more easily be adapted to what a local audience wants.

Would SAG-AFTRA agree? An individual familiar with the guild’s thinking on AI told IndieWire they were skeptical of how many new jobs this would create. A more likely outcome, they said, is more revenue for the single performer whose voice can now be dubbed in multiple accents.

The individual pointed to tech from AI company Flawless as an example of how AI can manipulate an actor’s performance, such as lip syncing, without costing jobs. Another company, Adapt Entertainment, works with a film’s original actors and uses AI for facial re-enactments to create local-language content.

However, SAG-AFTRA also struck a deal with Australian AI company Replica Studios earlier this year to create digital replicas for video game voice actors. That move created confusion and pushback among some members.

Deepdub prioritizes working with voice actors from the specific regions it targets; a company representative said dubs need nuances that a non-native speaker would lack. Even with machine translation, someone still needs to review the material (to capture the timing of a joke, for example) before a dub is broadcast-ready.

“It’s not fully automatic,” Krakowski said. “You need a human to curate the end results.”

Deepdub also supports lip syncing, but Krakowski said studios don’t often request it. It’s easy for streamers to offer numerous audio versions of a movie in different languages, but creating multiple video versions is a much heavier lift. Deepdub instead tries to adjust the dialogue pace or tweak the script to best match the performer.

For “Squid Game” and other major properties, Krakowski said, studios still prefer to use human voice actors. Deepdub did, however, create the English-language dub for the Hulu series “Vanda,” and its dubs have also been used on the MHz Choice streaming platform.

Although YouTube and social media videos make extensive use of AI dubbing, AI researcher Mike Seymour of the University of Sydney said only a small percentage of all media and entertainment content is dubbed with AI. Quality entertainment, he said, depends on a person understanding a premise and the subtext of a scene, and computers still aren’t good at that.

“That’s why actors are so good at their jobs,” Seymour said. “At one end of the spectrum there will be uses for these things… but where we’re at, 100 percent, we’re not going to replace actors.”

Deepdub plans to combine Accent Control with its existing translation capabilities to create higher-quality dubs in any language. The company competes in a crowded field of international dubbing companies, and it recently secured a $20 million investment to fuel its growth.

“The industry is definitely changing, and we need to adapt,” Krakowski told IndieWire. “The approach to this is work with talent, work with studios, and work with the industry to build something that is sustainable.”