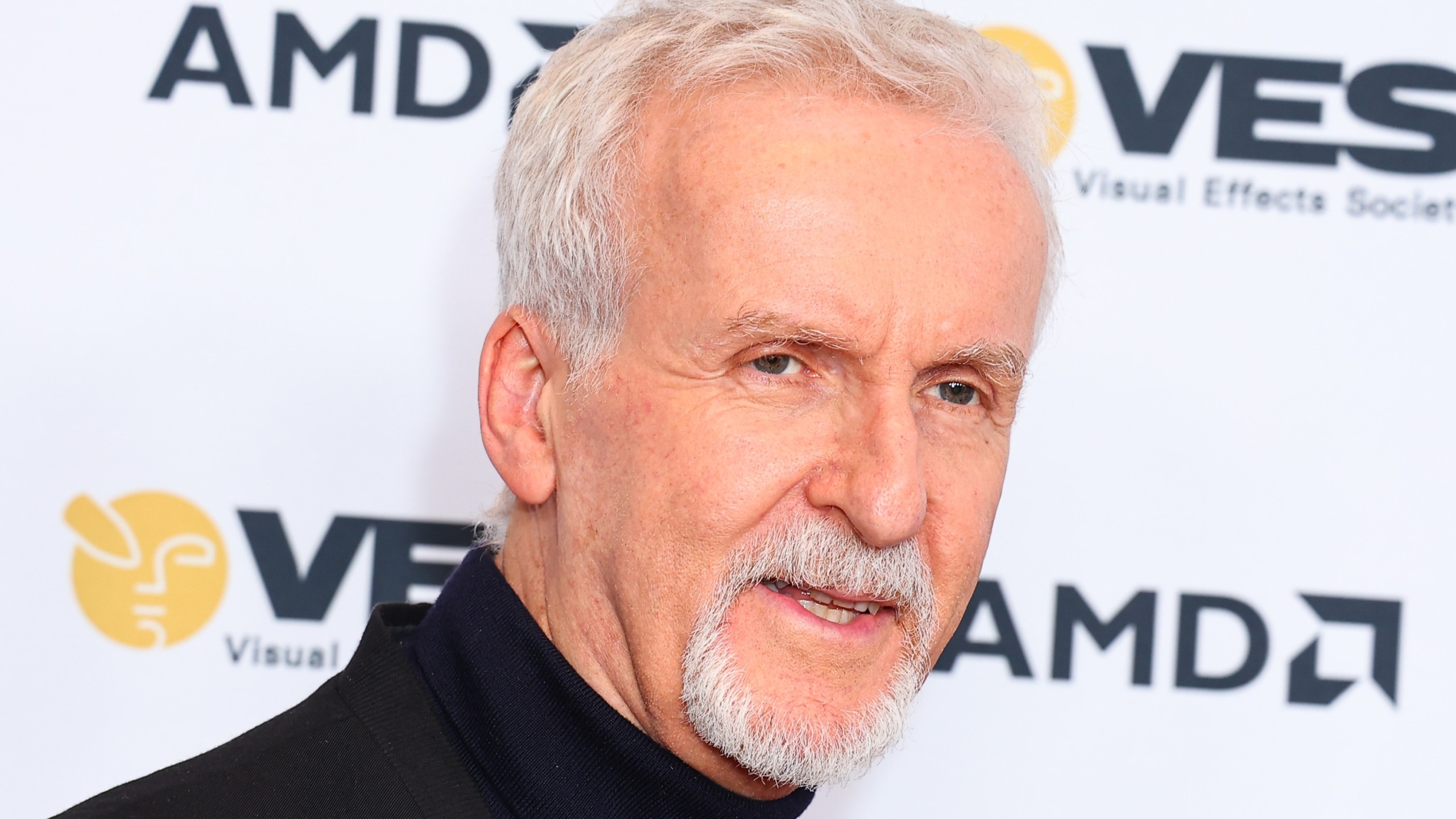

James Cameron is doubting the merits of an AI-made film.

The “Titanic” Oscar winner told CTV News that while he “absolutely shares their concern” over the rise of AI, he is less worried about the new technology’s impact on Hollywood.

“It’s never an issue of who wrote it, it’s a question of, is it a good story?” Cameron said. “I just don’t personally believe that a disembodied mind that’s just regurgitating what other embodied minds have said — about the life that they’ve had, about love, about lying, about fear, about mortality — and just put it all together into a word salad and then regurgitate it. I don’t believe that[’s going to] have something that’s going to move an audience.”

Filmmakers like Nicolas Winding Refn, Charlie Brooker, and Joe Russo have all recently addressed working with artificial intelligence during the scriptwriting process. Cameron noted that he “certainly wouldn’t be interested” in having AI write his scripts, but that only time will tell what AI’s true impact on Hollywood will be.

“Let’s wait 20 years, and if an AI wins an Oscar for Best Screenplay, I think we’ve got to take them seriously,” Cameron said.

As for the real-world dangers of AI in warfare, Cameron pointed to his own 1984 film “The Terminator,” which he co-wrote and directed.

“I warned you guys in 1984, and you didn’t listen,” Cameron said. “I think the weaponization of AI is the biggest danger. I think that we will get into the equivalent of a nuclear arms race with AI, and if we don’t build it, the other guys are for sure going to build it, and so then it’ll escalate.”

Cameron added, “You could imagine an AI in a combat theater, the whole thing just being fought by the computers at a speed humans can no longer intercede, and you have no ability to de-escalate.”

“Oppenheimer” writer-director Christopher Nolan similarly questioned the collective impulse to attribute “godlike characteristics” to AI in a recent Wired cover story, calling the true danger “the abdication of responsibility.”

“I feel that AI can still be a very powerful tool for us. I’m optimistic about that. I really am. But we have to view it as a tool,” Nolan said. “The person who wields it still has to maintain responsibility for wielding that tool. If we accord AI the status of a human being, the way at some point legally we did with corporations, then yes, we’re going to have huge problems.”